The Kappa statistic measures inter-rater reliability for categorical items. It is widely used to indicate the degree of agreement between the assessments provided by multiple appraisers (observers), typically during the inspection of products and equipment. Kappa compares the proportion of times appraisers actually agree that there is a defect/not a defect with the proportion of times they would be expected to agree by chance alone.

To put the way Kappa is calculated into perspective, consider a situation where you take a multiple-choice test you didn’t study for. Even though you were unprepared and may be picking answers at random, it’s likely that you will get some answers on the test correct by chance. The kappa statistic takes this element of chance into account. During inspections, appraisers won’t necessarily agree 100%—but they will agree to some extent by chance.

The higher the Kappa’s calculated value, the stronger the agreement. Kappa can range from -1 to 1, but in practice it usually falls between 0 and 1, where:

- 0 = Agreement is equivalent to chance

- 0.01-0.20 = Agreement is slightly above chance

- 0.21-0.40 = Fair agreement

- 0.41-0.60 = Moderate agreement

- 0.61-0.80 = Substantial agreement

- 0.81-0.99 = Near-perfect agreement

- 1 = Perfect agreement

Negative values may occur when agreement is lower than would be expected by chance; however, this rarely happens.
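The interpretation bands above can be expressed as a small lookup. The following is a minimal Python sketch, not a standard library function: the name `interpret_kappa` is hypothetical, and it assumes that any value falling between two listed bands belongs to the band whose upper bound it does not exceed.

```python
def interpret_kappa(kappa: float) -> str:
    """Map a kappa value to the descriptive bands listed in this article."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "Agreement is less than expected by chance"
    if kappa == 0:
        return "Agreement is equivalent to chance"
    if kappa >= 1.0:
        return "Perfect agreement"

    # (upper bound, label) pairs; assumption: bounds are inclusive.
    bands = [
        (0.20, "Agreement is slightly above chance"),
        (0.40, "Fair agreement"),
        (0.60, "Moderate agreement"),
        (0.80, "Substantial agreement"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    # Anything above 0.80 and below 1 is near-perfect.
    return "Near-perfect agreement"


print(interpret_kappa(0.58))  # Moderate agreement
```

The cut-offs are conventions rather than strict rules, so a team may reasonably choose stricter thresholds for critical inspections.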

Typically, Kappas are used during visual inspection to identify defects throughout the manufacturing process. Some companies conduct Kappa studies during these inspections. Kappa studies enable you to understand whether an appraiser is in agreement with their colleagues or with a reference value, which is typically the standard provided by an expert source. During a Kappa study, companies calculate the Kappa among the appraisers, then compare the result to the known standard.

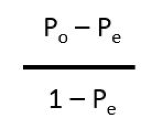

The formula for calculating kappa is:

$$\kappa = \frac{P_o - P_e}{1 - P_e}$$

where $P_o$ is the actual (observed) probability of agreement among observers, and $P_e$ is the expected probability of chance agreement among observers.
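As an illustration of how the observed and expected agreement combine, here is a minimal Python sketch for the two-appraiser case (Cohen's kappa). The function name `cohens_kappa` and the sample ratings are hypothetical and chosen only for demonstration; a study with more than two appraisers would need a different variant of the statistic.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two appraisers rating the same items.

    kappa = (Po - Pe) / (1 - Pe)
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both appraisers must rate the same non-empty set of items")
    n = len(ratings_a)

    # Po: proportion of items on which the two appraisers agree.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Pe: chance agreement, from each appraiser's category frequencies.
    count_a = Counter(ratings_a)
    count_b = Counter(ratings_b)
    categories = count_a.keys() | count_b.keys()
    p_e = sum(count_a[c] * count_b[c] for c in categories) / (n * n)

    if p_e == 1.0:
        # Both appraisers used a single identical category; kappa is undefined.
        raise ValueError("kappa is undefined when expected agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical inspection results for ten parts.
appraiser_a = ["defect", "defect", "ok", "ok", "ok", "defect", "ok", "ok", "defect", "ok"]
appraiser_b = ["defect", "ok", "ok", "ok", "ok", "defect", "ok", "defect", "defect", "ok"]

kappa = cohens_kappa(appraiser_a, appraiser_b)
print(round(kappa, 3))  # 0.583
```

In this made-up example the appraisers agree on 8 of 10 parts (Po = 0.8), while their category frequencies imply a chance agreement of Pe = 0.52, so kappa is 0.28 / 0.48, or about 0.58.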

Kappa statistics and studies are helpful to businesses because they can be used as a measure of quality assurance. Consistent inspection helps catch defects and other mistakes in manufactured products before the product is delivered to customers. In turn, this promotes better customer satisfaction and reduces waste.

Kappas can be beneficial to many different industries and are not restricted to visual inspection within a manufacturing environment; for example, they are also commonly used in healthcare to determine how often doctors’ diagnoses of patient conditions agree. Whether used in manufacturing or not, Kappa statistics can be an important part of detecting defects in products or services and of making process control decisions. In fact, Kappa may have an integral role within an overall Six Sigma methodology.

Similar Glossary Terms

- Cost of Poor Quality

- Quality Assurance (QA)

- Capability

- NORMSINV

- Zero Defects

- Pearson Correlation

- Key Performance Indicators (KPI)

- Hazard Ratio

- TEAM Metrics